Main steps of starting a project

Project planning

Specify the aim of the project and the necessary computational tasks. Estimate the required computational resources (e.g. CPU cores, memory, storage) and the execution time of the tasks based on previous runs.
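A common way to summarise the CPU part of a request is in core-hours (CPU cores × wall-clock hours × number of runs). The sketch below assumes that unit and uses hypothetical figures. The helper name `estimate_core_hours` is illustrative. The per-run figures can be taken from a previous job with `sacct -j <jobid> --format=JobID,Elapsed,NCPUS,MaxRSS,State`, which reports elapsed time, allocated cores and peak memory.

```shell
#!/bin/bash
# Rough compute-budget estimate (hypothetical figures):
# core-hours = CPU cores per run x wall-clock hours per run x number of runs
estimate_core_hours() {
    local cores=$1 hours=$2 runs=$3
    # awk is used because bash arithmetic cannot handle fractional hours
    awk -v c="$cores" -v h="$hours" -v n="$runs" 'BEGIN { printf "%.1f\n", c * h * n }'
}

# Example: 50 runs, each using 16 cores for 1.5 hours (as measured on earlier runs)
estimate_core_hours 16 1.5 50   # prints 1200.0
```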

Project application

Provide the necessary information about the project on the HPC Portal:

Name of the project

Aims of the project and the expected achievements

Requested resources

For more information, see the “Project application” chapter and the HPC Portal User Manual.

Project approval

The HPC Competence Centre provides technical support with project applications (e.g. supplement requests) if necessary.

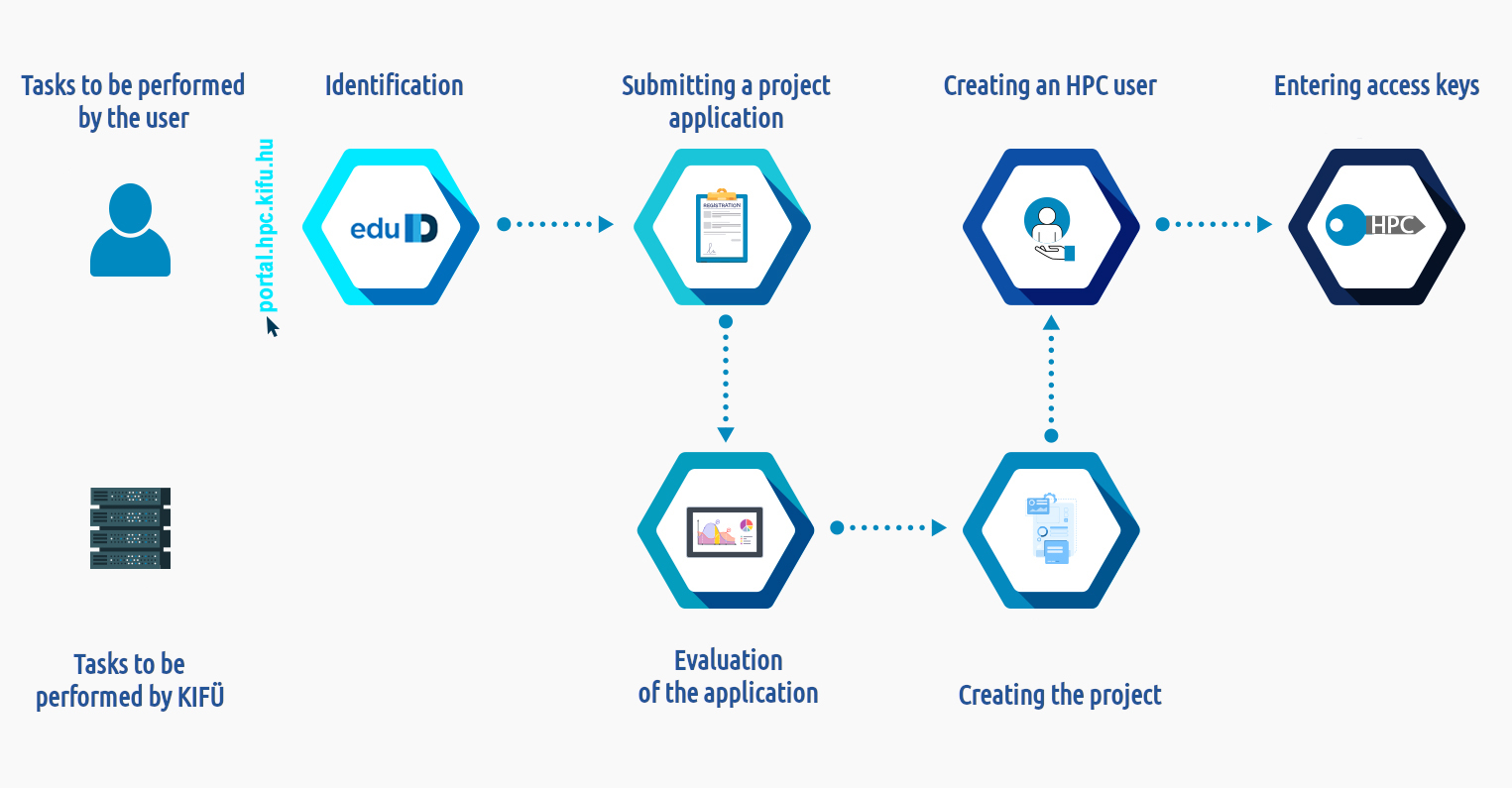

Workflow of the HPC project application:

Project preparation

Create a project directory on the login node to store all the necessary files (data, source code, software installations, etc.). Make sure the project directory has appropriate permissions.
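A minimal sketch of creating the project directory, assuming a bash shell on the login node. The location `$HOME/projects/myproject` and the subdirectory names are hypothetical; use the storage area intended for project data. The permissions give the owner full access, group members read access and others no access. Use `g=rwX` instead if group members also need to write, and `chgrp` to assign the project's group if the system requires it.

```shell
#!/bin/bash
set -euo pipefail

# Hypothetical location: replace with the storage area assigned to your project.
PROJECT_DIR="${PROJECT_DIR:-$HOME/projects/myproject}"

# Create subdirectories for data, source code, software installations and job scripts.
mkdir -p "$PROJECT_DIR"/{data,src,software,jobs}

# Owner: read/write/traverse; group: read/traverse; others: no access.
# "X" sets execute only on directories (and files that are already executable).
chmod -R u=rwX,g=rX,o= "$PROJECT_DIR"

ls -ld "$PROJECT_DIR"
```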

While preparing the project, take into account the characteristics of the different types of storage available and the quota restrictions that apply to them.
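A quick check of how much space the project directory uses, assuming standard Linux tools on the login node. `df` reports the capacity of the file system, not your personal or project quota; quota limits are usually shown by a site-specific command (for example `quota -s`, or `lfs quota` on Lustre file systems), so check the system's storage documentation for the one that applies. The default of the current directory is a placeholder.

```shell
#!/bin/bash
# Placeholder default: the current directory; set PROJECT_DIR to your project directory.
PROJECT_DIR="${PROJECT_DIR:-.}"

# Size and free space of the file system that holds the project directory.
df -h "$PROJECT_DIR"

# Total size of the project directory (can be slow on very large directory trees).
du -sh "$PROJECT_DIR"
```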

Resource planning

Select the HPC partition (job queue) suitable for the project tasks. Each partition has a specific resource profile.

Available partitions:

CPU

GPU

AI

BigData

For more information, see the “Available partitions” chapter.
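The partitions and their resource profiles can also be inspected from the login node with `sinfo`. A minimal sketch; the helper names are hypothetical. Only the partition name `cpu` is taken from the SLURM script example in this guide; the actual names of the other partitions may differ from the labels listed above.

```shell
#!/bin/bash
# One line per partition: availability, time limit and node counts
# (allocated/idle/other/total).
list_partitions() {
    sinfo -s
}

# Details of one partition: name, availability, time limit, number of nodes,
# CPUs per node, memory per node (MB) and generic resources such as GPUs.
partition_details() {
    sinfo -p "$1" -o "%P %a %l %D %c %m %G"
}
```

For example, `partition_details cpu` shows how many cores and how much memory a node of the CPU partition offers, which helps when choosing `-c`, `-n` and `--mem-per-cpu`.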

Task preparation

Write or prepare the program you would like to run on the HPC system. It can be a scientific simulation, a data analysis or any other task that can make use of HPC resources.

Job preparation

Prepare a SLURM script (.sbatch file) or an executable shell script (.sh file) as the job definition.

In the script, specify the required resources, the execution time, the names of the output files, etc. This script is then used to submit the job to the SLURM scheduler.

SLURM script example:

#!/bin/bash

#SBATCH -A <projectname>

#SBATCH --partition=cpu

#SBATCH --job-name=<jobname>

#SBATCH --time=01:30:00

#SBATCH --mem-per-cpu=3000

#SBATCH --mail-type=ALL

#SBATCH --mail-user=<emailaddress>

<command>

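Another example of a resource specification, requesting 16 tasks (-n 16) with 8 CPU cores per task (-c 8) and at most 8 tasks per node (--ntasks-per-node 8):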

#SBATCH -c 8

#SBATCH -n 16

#SBATCH --ntasks-per-node 8
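The arithmetic behind this specification can be checked with a few lines of shell. A minimal sketch; it assumes each node of the chosen partition has at least 64 CPU cores, since otherwise 8 tasks of 8 cores each cannot be placed on one node.

```shell
#!/bin/bash
# Values from the directives above: -n 16, -c 8, --ntasks-per-node 8
ntasks=16
cpus_per_task=8
ntasks_per_node=8

# Total cores allocated to the job
total_cpus=$(( ntasks * cpus_per_task ))
# Ceiling division: at most 8 tasks per node, so 16 tasks need at least 2 nodes
min_nodes=$(( (ntasks + ntasks_per_node - 1) / ntasks_per_node ))
# Cores needed on each fully used node
cpus_per_node=$(( ntasks_per_node * cpus_per_task ))

echo "total CPU cores: $total_cpus"     # 128
echo "minimum nodes:   $min_nodes"      # 2
echo "cores per node:  $cpus_per_node"  # 64
```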

For parallel jobs, specify the number of required tasks (i.e. parallel processes) and launch the program in parallel with srun:

#SBATCH -n 16

srun <command>
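A complete parallel job script combining these directives might look like the sketch below. It assumes a hybrid MPI/OpenMP program in which each task starts as many threads as it has cores; setting `OMP_NUM_THREADS` is only meaningful for OpenMP programs. `<projectname>`, `<jobname>` and `<command>` are placeholders as in the earlier example, so the script must be edited before submission.

```shell
#!/bin/bash
#SBATCH -A <projectname>
#SBATCH --partition=cpu
#SBATCH --job-name=<jobname>
#SBATCH --time=01:30:00
#SBATCH -n 16
#SBATCH -c 8
#SBATCH --ntasks-per-node=8

# Assumption: the program uses OpenMP threads inside each task.
# SLURM_CPUS_PER_TASK is set by Slurm inside the job because -c is given.
export OMP_NUM_THREADS=$SLURM_CPUS_PER_TASK

# srun starts one copy of the program per task (16 in total).
# Passing --cpus-per-task explicitly keeps 8 cores per task even on
# Slurm versions where srun does not inherit -c from sbatch.
srun --cpus-per-task="$SLURM_CPUS_PER_TASK" <command>
```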

Job submission

Submit the SLURM script to the SLURM scheduler using the sbatch command.

For example:

sbatch my_slurm_script.sbatch

sbatch places the job in the queue, and the job starts on the HPC system once the requested resources become available.
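Monitoring a job requires its job ID. A minimal sketch, assuming a bash shell on the login node: `sbatch --parsable` prints only the job ID (followed by `;clustername` on multi-cluster systems) instead of the usual `Submitted batch job <jobid>` message. The helper name `submit_job` is hypothetical.

```shell
#!/bin/bash
# Submit a job script and print only its job ID.
submit_job() {
    local out
    # --parsable output is "jobid" or "jobid;cluster"
    out=$(sbatch --parsable "$1") || return 1
    # Drop the optional ";cluster" suffix
    echo "${out%%;*}"
}
```

Usage: `jobid=$(submit_job my_slurm_script.sbatch)` stores the ID in a variable that can then be passed to `squeue -j "$jobid"` or `sacct -j "$jobid"`.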

Job monitoring

Check the state of the job with the squeue or the sacct command.

For example:

sacct --format=jobid,elapsed,ncpus,ntasks,state
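squeue only lists jobs that are still pending or running, while sacct also reports finished jobs from the accounting database. The two hypothetical helpers below wrap both commands; the selected output columns are one reasonable choice, not a site requirement.

```shell
#!/bin/bash
# Your own pending and running jobs: job ID, partition, name, state,
# elapsed time, number of nodes and reason/node list.
my_jobs() {
    squeue -u "$USER" -o "%.10i %.9P %.20j %.8T %.10M %.6D %R"
}

# Accounting record of one job (also after it has finished), including
# the peak memory (MaxRSS) of each job step.
job_report() {
    sacct -j "$1" --format=JobID,JobName,Elapsed,NCPUS,NTasks,MaxRSS,State
}
```

For example, `job_report <jobid>` after a run shows its elapsed time and peak memory, which can feed the resource estimates of the next project.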

Concepts relating to the SLURM scheduler can be found here:

For more information, see the User Documentation.