Lustre

Lustre is a scalable, parallel, distributed data storage architecture for clusters. Its central element is the Lustre file system, which provides users with a standard POSIX interface. Lustre is best known from supercomputing: it runs on many of the largest HPC clusters worldwide. This is mainly because it scales in both capacity and performance, supports active/active high-availability operation, and works with a wide range of high-performance, low-latency network technologies.

Lustre Components

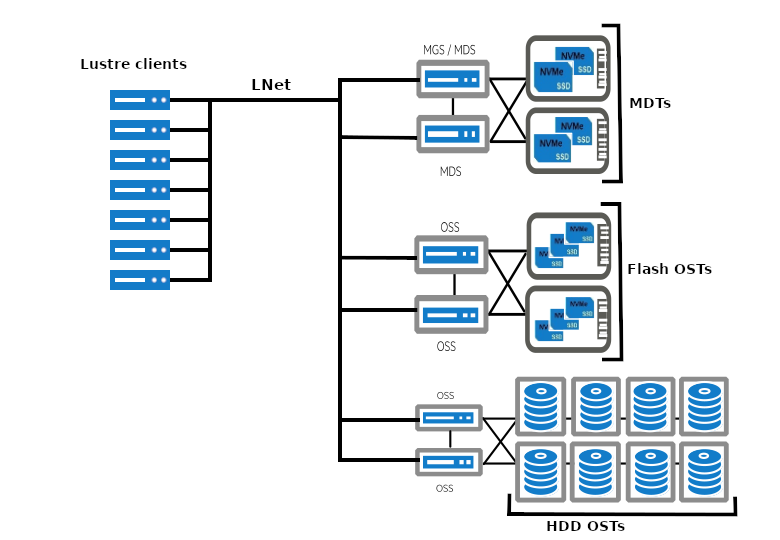

A Lustre system consists of the following components:

- MGS (Management Server) / MGT (Management Target)

The MGS manages the configuration of the whole Lustre system, which is stored on the MGT. In practice, the MGS is often not a separate server. Instead, it runs on the high-availability (HA) MDS pair that also serves the root directory of the file system. This is also the case on Komondor.

- MDS (Metadata Server) / MDT (Metadata Target)

The MDS makes its MDTs available to the clients. The MDTs store the metadata of the filesystem namespace, such as file names, attributes, and the file layout (i.e., which OST objects hold the file's data).

- OSS (Object Storage Server) / OST (Object Storage Target)

File data is stored on one or more OSTs in the form of OST objects. Each OSS provides access to the OSTs it manages. A system can contain several types of OST (e.g., disk and flash; see the figure).

- Clients

Clients (e.g., compute, visualization, or login nodes) can read from and write to the Lustre filesystem once they have mounted it.

- LNet (Lustre networking)

LNet provides communication and routing between the components of the Lustre system over various physical network types. On Komondor, this communication runs over a Slingshot network.
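Each MDT and OST is identified by a target name of the form <fsname>-MDTxxxx or <fsname>-OSTxxxx, where xxxx is the target index written as four hexadecimal digits. These names appear, for example, in the output of lfs df. The sh function below decodes such a name. It is a hypothetical helper, not part of the Lustre tools, and fsname is only a placeholder for the real filesystem name.

```shell
# Hypothetical helper (not part of the Lustre tools): split a target name
# such as "fsname-OST0005" into its kind (MDT or OST) and decimal index.
# Assumes the <fsname>-<MDT|OST><4 hex digits> naming; a trailing "_UUID"
# (as printed by lfs df) is removed first.
target_info() {
    name=${1%_UUID}                               # drop optional _UUID suffix
    suffix=${name##*-}                            # text after the last '-', e.g. OST0005
    kind=$(printf '%s' "$suffix" | cut -c1-3)     # MDT or OST
    hex=$(printf '%s' "$suffix" | cut -c4-)       # index in hexadecimal
    case $kind in
        MDT|OST) ;;
        *) echo "not a Lustre target name: $1" >&2; return 1 ;;
    esac
    case $hex in
        ''|*[!0-9a-fA-F]*) echo "bad target index in: $1" >&2; return 1 ;;
    esac
    echo "$kind $((0x$hex))"                      # convert hex index to decimal
}
```

For example, target_info fsname-OST0005_UUID prints OST 5. The decimal index is the same number that lfs getstripe reports in its obdidx column.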

Useful Knowledge

Striping

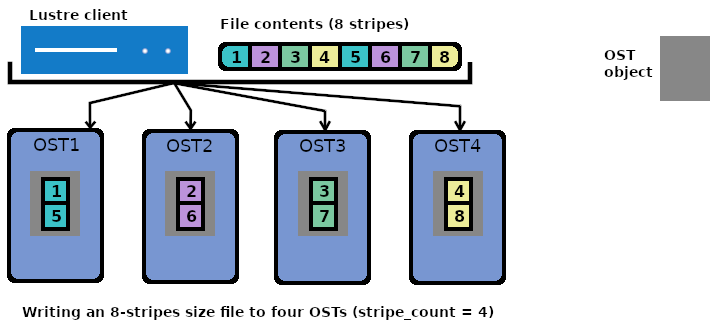

A major contributor to the high performance of the Lustre filesystem is its ability to stripe data across multiple OSTs. When a large file is spread over several OSTs, reads and writes can use the bandwidth of all of them in parallel.

The stripe_count parameter sets the number of OSTs (and hence OST objects) that a file's data is spread over. The stripe_size parameter determines how much data is written to one OST before moving on to the next one. The filesystem defines a default for each parameter (stripe_count = 1, stripe_size = 1MiB). Both can be overridden for a directory or for an individual file.

As the figure shows, a file is written in chunks of stripe_size bytes (stripes), which are assigned to the OSTs in round-robin order. The file has one OST object on each of the four OSTs, and in this example each object receives two stripes.
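The round-robin placement in the figure can be expressed with simple integer arithmetic. The following sketch is a hypothetical sh function that assumes a plain RAID0 layout and arguments given in bytes. For a byte offset in the file, it prints which of the file's OST objects holds that byte (its position in the layout, counting from 0) and the offset within that object.

```shell
# Hypothetical helper: locate a file byte offset in a round-robin (RAID0)
# layout. Arguments: offset, stripe_size, stripe_count (all plain integers).
# Prints "<object position in layout> <offset inside that object>".
stripe_location() {
    offset=$1 size=$2 count=$3
    stripe=$((offset / size))                          # global stripe number (0-based)
    obj=$((stripe % count))                            # object that receives this stripe
    obj_off=$(( (stripe / count) * size + offset % size ))  # earlier stripes in obj + remainder
    echo "$obj $obj_off"
}
```

Assume four OSTs and 1MiB stripes (the stripe size of the figure is an assumption here). Then stripe_location 5242890 1048576 4 prints 1 1048586. The byte lies in the sixth stripe, which is the second stripe stored in the second object.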

Setting the File Layout/Striping Configuration

Use the following command to create a new file with a specific layout, or to change the striping configuration of an existing directory. A new directory setting applies only to files created in that directory afterwards; existing files keep their layout.

```
lfs setstripe -c <stripe_count> -S <stripe_size> <path to file or directory>
```

Set striping for an already existing directory (stripe_count = 2 and stripe_size = 4MiB):

```
lfs setstripe -c 2 -S 4M ./testdir-2s-4M
```

Create a new file with a specific layout that differs from the default striping configuration (stripe_count = 2 and stripe_size = 4MiB):

```
lfs setstripe -c 2 -S 4M ./testfile-2s-4M
```
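The command above can be wrapped in a small validation function that catches invalid values before they reach the filesystem. This is a hypothetical sketch, not part of lfs. The names to_bytes and safe_setstripe are illustrative. Sizes may carry a K, M or G suffix (binary units). The LFS variable is an assumed hook that lets you substitute echo for a dry run.

```shell
# Hypothetical helpers (not part of lfs).
# Convert a size with optional K/M/G suffix (binary units) to bytes.
to_bytes() {
    num=${1%[KkMmGg]}                             # strip optional unit suffix
    case $num in ''|*[!0-9]*) echo "invalid size: $1" >&2; return 1 ;; esac
    case $1 in
        *[Kk]) echo $((num * 1024)) ;;
        *[Mm]) echo $((num * 1048576)) ;;
        *[Gg]) echo $((num * 1073741824)) ;;
        *)     echo "$num" ;;                     # already in bytes
    esac
}

# Validate stripe_count and stripe_size, then run lfs setstripe.
# Usage: safe_setstripe <stripe_count> <stripe_size> <path>
# Set LFS=echo to print the command instead of running it.
safe_setstripe() {
    count=$1 path=$3
    bytes=$(to_bytes "$2") || return 1
    case $count in
        ''|*[!0-9]*|0) echo "stripe_count must be a positive integer: $count" >&2; return 1 ;;
    esac
    if [ "$bytes" -eq 0 ] || [ $((bytes % 65536)) -ne 0 ]; then
        echo "stripe_size must be a non-zero multiple of 64KiB: $2" >&2
        return 1
    fi
    if [ "$bytes" -lt 524288 ]; then
        echo "warning: stripe_size is below the recommended 512KiB" >&2
    fi
    "${LFS:-lfs}" setstripe -c "$count" -S "$bytes" "$path"
}
```

For example, LFS=echo safe_setstripe 2 4M ./testfile-2s-4M prints setstripe -c 2 -S 4194304 ./testfile-2s-4M. A stripe size of 100K is rejected because it is not a multiple of 64KiB.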

Check Striping

Continuing the example above, check the striping settings of the directory:

```
$ lfs getstripe ./testdir-2s-4M
./testdir-2s-4M
stripe_count: 2 stripe_size: 4194304 pattern: raid0 stripe_offset: -1
```

The directory has the expected settings (stripe_size is reported in bytes, and stripe_offset: -1 means that the starting OST is chosen automatically). A file created inside the directory inherits these settings:

```
$ touch ./testdir-2s-4M/tf01
$ lfs getstripe ./testdir-2s-4M/tf01
./testdir-2s-4M/tf01
lmm_stripe_count:  2
lmm_stripe_size:   4194304
lmm_pattern:       raid0
lmm_layout_gen:    0
lmm_stripe_offset: 3
        obdidx           objid           objid           group
             3       153237056       0x9223640               0
             5        45412113       0x2b4ef11               0
```

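The output confirms that the file inherited the directory's settings. The obdidx column lists the indices of the OSTs holding the file's objects. OST0003 and OST0005 will receive the file content, starting with OST0003 (lmm_stripe_offset: 3).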
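When checking many files, the OST indices can be extracted from this output with a short awk filter. The sketch below is a hypothetical helper. It assumes the plain (single-component) layout output shown above, in which the object table follows the obdidx header line.

```shell
# Hypothetical helper: read lfs getstripe output for one file on stdin and
# print the OST indices (obdidx column) of its objects, in layout order.
ost_indices() {
    awk '/^[ \t]*obdidx/ { in_table = 1; next }                         # table starts here
         in_table && $1 ~ /^[0-9]+$/ { printf "%s%s", sep, $1; sep = " " }
         END { print "" }'
}
```

For the file above, lfs getstripe ./testdir-2s-4M/tf01 | ost_indices would print 3 5.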

Similarly, check the striping settings of the file created with lfs setstripe above:

```
$ lfs getstripe ./testfile-2s-4M
./testfile-2s-4M
lmm_stripe_count:  2
lmm_stripe_size:   4194304
lmm_pattern:       raid0
lmm_layout_gen:    0
lmm_stripe_offset: 5
        obdidx           objid           objid           group
             5        45412112       0x2b4ef10               0
             0       153553920       0x9270c00               0
```

Data written to testfile-2s-4M will therefore be distributed in 4MiB stripes over OST0005 and OST0000, starting with OST0005.
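Given the layout, you can work out how much of a file ends up in each OST object. The following sketch is a hypothetical helper that assumes a round-robin RAID0 layout and sizes given in bytes. It prints the number of bytes stored in each object, in layout order.

```shell
# Hypothetical helper: bytes stored in each OST object of a striped file.
# Arguments: file size, stripe_size, stripe_count (all in bytes/integers).
bytes_per_object() {
    fsize=$1 size=$2 count=$3
    round=$((size * count))          # bytes written in one full pass over all objects
    full=$((fsize / round))          # number of complete passes
    rest=$((fsize % round))          # bytes left for the final, partial pass
    out='' i=0
    while [ "$i" -lt "$count" ]; do
        extra=$((rest - i * size))   # object i's share of the partial pass
        if [ "$extra" -lt 0 ]; then extra=0; fi
        if [ "$extra" -gt "$size" ]; then extra=$size; fi
        out="$out${out:+ }$((full * size + extra))"
        i=$((i + 1))
    done
    echo "$out"
}
```

As an illustration, take an assumed 10MiB file with the layout of testfile-2s-4M. Running bytes_per_object 10485760 4194304 2 prints 6291456 4194304. That is 6MiB in the first object (on OST0005) and 4MiB in the second (on OST0000).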

Performance Considerations (striping)

Warning

The striping settings can greatly affect the performance of file operations, so adjust them carefully according to the I/O pattern of the application and the size of the files!

Stripe count

- Increase stripe_count when:

  - working with large (> 1GiB) files
  - multiple processes work on a single file (Single Shared File)

Tip

Always use the system default (stripe_count = 1) for small files!

Do not stripe over OSTs if the I/O pattern is File-Per-Process!
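The rules of thumb above can be encoded in a small decision helper. This is a hypothetical sketch. The pattern names fpp and ssf are made up for illustration, and any other value falls back to the file-size rule. This page does not prescribe how far to increase stripe_count, so the caller passes in the wider count.

```shell
# Hypothetical helper encoding the stripe_count rules of thumb on this page.
# Arguments: file size in bytes, I/O pattern ("fpp" = file per process,
# "ssf" = single shared file, anything else = decide by size only), and the
# stripe_count to use when striping is worthwhile (chosen by the caller).
suggest_stripe_count() {
    fsize=$1 pattern=$2 wide=$3
    case $pattern in
        fpp) echo 1; return 0 ;;        # do not stripe file-per-process output
        ssf) echo "$wide"; return 0 ;;  # many processes share one file
    esac
    if [ "$fsize" -gt 1073741824 ]; then
        echo "$wide"                    # large (> 1GiB) file
    else
        echo 1                          # small file: keep the system default
    fi
}
```

For example, suggest_stripe_count 2147483648 fpp 8 prints 1, because file-per-process output is not striped. For a 2GiB file with another pattern, suggest_stripe_count 2147483648 other 8 prints 8.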

Stripe size

stripe_size can also affect performance when working with large files, though much less than stripe_count does.

Tip

minimum recommended value: 512KiB

in most cases, a value between 1MiB and 4MiB is sufficient

Warning

stripe_size must be a multiple of 64KiB!

Note

stripe_size has no impact if stripe_count = 1

If your application writes to the file in a consistent and aligned way, make the stripe size a multiple of the write() size. Each write then lands entirely within one OST object. It does not cross an object boundary and get split between two OSTs.
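Both conditions can be checked with integer arithmetic. The two functions below are hypothetical sketches, with all values in bytes. write_aligned tests whether the stripe size is a multiple of the write size. crosses_stripe tests whether a single write starting at a given offset spans two stripes. When stripe_count > 1, such a write touches two OST objects.

```shell
# Hypothetical helpers; all values are in bytes.
# Succeeds if stripe_size is a whole multiple of the write() size.
write_aligned() {
    stripe_size=$1 write_size=$2
    [ "$write_size" -gt 0 ] && [ $((stripe_size % write_size)) -eq 0 ]
}

# Succeeds if a write of len bytes starting at offset spans two stripes,
# i.e. its first and last byte fall into different stripes.
crosses_stripe() {
    offset=$1 len=$2 stripe_size=$3
    [ $((offset / stripe_size)) -ne $(( (offset + len - 1) / stripe_size )) ]
}
```

Consider 4MiB stripes and 1MiB writes. write_aligned 4194304 1048576 succeeds. A 1MiB write at offset 3MiB (3145728) stays within one stripe, while the same write at 3.5MiB (3670016) crosses into the next one.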